I have spent the past few weeks staring at what seem to be digital ghosts; they look human, speak the way we do, and cause anger and despair. But they are not real. They are machines wearing human skin.

Something is shifting in Pakistan’s social media landscape. It is not merely a rumour. It is not the old cycle of misinformation. It feels darker. Artificial intelligence is reshaping reality frame by frame until the internet resembles a hall of mirrors.

For me, it began on November 8, when an account called ‘PakVocals’ posted a video on X that claimed to show journalist Benazir Shah dancing in a nightclub. The caption was cruel, mocking her with derogatory remarks aimed at her professional credibility. At the time of writing, the video had garnered more than half a million views.

It did exactly what it was designed to do: turn a journalist into a target. Mission accomplished!

To most viewers, the clip probably passed as real, but for me, something felt off.

When the mask slips

I opened the clip in DaVinci Resolve, a video editing program that can be downloaded online, and watched it frame by frame, because deepfakes are rarely perfect; they usually leave behind little crumbs of evidence.

And at the 30th frame, I found it!

For a split second, the skin tone flickered. The outline of the face rippled like water. The mask, it seemed, had slipped, revealing a clear sign of face manipulation.
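Here the glitch was found by eye. The same idea can also be expressed in code: a flicker is a single frame that jumps away from its neighbours in some per-frame measurement, such as the average brightness of the face region. The minimal sketch below assumes those per-frame measurements have already been extracted (that step is not shown). It uses invented numbers rather than data from the actual clip, and the function name, window size and threshold are all hypothetical.

```python
from statistics import median


def flag_flicker_frames(values, window=2, k=5.0):
    """Return indices of frames that jump away from their neighbours.

    values: one number per frame, e.g. the mean brightness of the face
        region (assumed to be measured beforehand; not shown here).
    window: frames on each side used as the local reference (hypothetical).
    k: hypothetical sensitivity multiplier, tuned by hand per clip.
    """
    n = len(values)
    residuals = []
    for i in range(n):
        # Median of the surrounding frames is robust to one odd frame,
        # so a single glitch does not distort its neighbours' reference.
        lo, hi = max(0, i - window), min(n, i + window + 1)
        local = median(values[lo:hi])
        residuals.append(abs(values[i] - local))
    # Typical deviation from the local median across the whole clip.
    baseline = median(residuals) if residuals else 0.0
    # Avoid a zero threshold on a perfectly static clip.
    threshold = k * max(baseline, 1e-9)
    return [i for i, r in enumerate(residuals) if r > threshold]


# Invented data: a gently varying tone with one glitched frame at index 30.
tones = [120.0 + 0.1 * (i % 3) for i in range(60)]
tones[30] = 128.0
print(flag_flicker_frames(tones))  # → [30]
```

Comparing each frame with the median of its neighbours, rather than with the previous frame alone, stops one bad frame from also flagging the normal frame that follows it.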

I then used Google Lens, another free online tool, to scan several screenshots from the deepfake video. The aim was to identify the source footage, and several matching search results soon popped up, one after the other.

The body in the video didn’t belong to Benazir Shah but to Indian actress Jannat Zubair Rahmani — the clothes were the same, and so was the lighting. The only thing the fakers had changed was the face.

On X, Shah called out the deepfake video and noted that the account was followed by the information minister of the current government. That is when the story took a strange turn: ‘PakVocals’ issued an apology, citing religious fear of slander. But despite the pious language, the video is still online, which shows that their true intention was to damage her reputation.

On November 18, another X account, named ‘Princess Hania Shah’, posted a new deepfake of Benazir Shah and accused her of being a “traitor”. This one was a lazy edit, I thought to myself. But the intent, again, was dangerous.

This time, I didn’t even need editing software. I used Google Lens to find the original footage online and put it side by side with the fake. They were perfect mirrors: same moves, same lighting, just a different face. It took five minutes to prove it was a lie. Yet more than 180,000 people had already watched it.

Fact-checking — a necessity

X, formerly Twitter, has a system called “Community Notes” designed to flag fake content. But it has failed too many times, and it failed again here: these deepfake videos are still up on the platform without a warning label.

What’s worse is that when users asked X’s own AI chatbot, Grok, to verify the videos, it failed to identify them as AI-generated content, revealing the limitations of billion-dollar algorithms. Simply put, we can’t rely on tech giants to protect us. If third-party fact-checkers fail to do their job, nobody will.

With every passing day, fact-checking is becoming more of a necessity, especially in times of conflict and war, because AI-manipulated content is consumed not just by ordinary people but also by mainstream news media.

During the recent Israel-Iran conflict in mid-2025, a viral post on X purported to show analysts at an Israeli news channel fleeing their studio after an Iranian strike. While the footage initially seemed convincing, closer inspection revealed ghostly movements, unnaturally smooth camera panning and flat audio. Yet many Pakistani news outlets aired the clip as authentic.

Just a few hours after the Benazir Shah deepfakes, I found another deepfake video. It claimed to show Pakistani soldiers abusing a Baloch woman in a desert setting. The account that posted the clip, ‘Yousuf Bahand’, added a taunt: “Some will say this is fake.”

He was right. It is a fake!

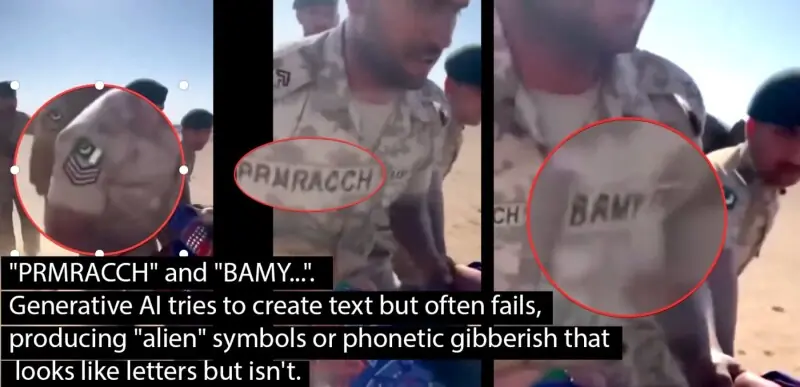

I went back to frame-by-frame analysis. The clues were in plain sight. The name tags on the soldiers’ uniforms read “PRMRACCH” and “BAMY”, a classic AI mistake: image generators often render text as plausible-looking gibberish.

The clip was crammed with other visual inconsistencies as well. For one, a hand of the supposed soldier appeared to merge into the woman’s hands.

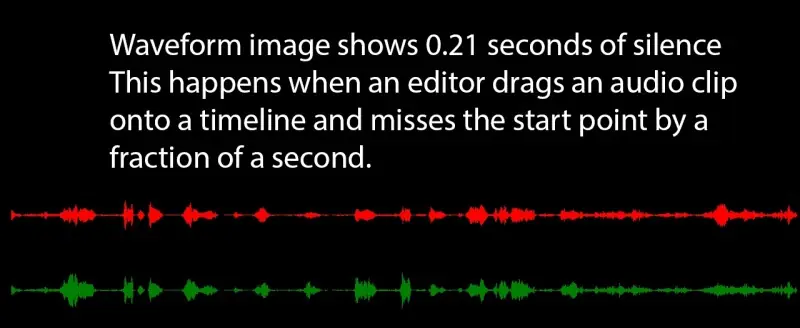

It was not just the video; the audio gave it away too. When the soldier yelled “damn it”, it came out in a clean American accent, nothing like what you’d expect in that setting. So I ran the clip through FFmpeg, a free command-line tool for converting and analysing audio and video.

The data showed something you would not expect in a genuine desert recording: 0.21 seconds of total silence at the very start. In a windy, open environment, a microphone picks up ambient noise the moment it starts recording. Dead air like that usually means someone pasted the audio track onto the video afterwards.
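The silence check can be illustrated with a short sketch. It assumes the audio has already been decoded into mono floating-point samples (FFmpeg can do this, and its `silencedetect` filter can also report silent stretches directly). The function name and the -60 dB cut-off are hypothetical choices, not the settings used in this investigation, and the audio is invented.

```python
import math


def leading_silence_seconds(samples, sample_rate, threshold_db=-60.0):
    """Duration of the silent stretch at the very start of a recording.

    samples: mono audio as floats in [-1.0, 1.0] (assumed already decoded).
    sample_rate: samples per second, e.g. 48000.
    threshold_db: hypothetical level, relative to full scale, below which
        a sample counts as silent.
    """
    # Convert the dB threshold to a linear amplitude: -60 dB -> 0.001.
    threshold = 10 ** (threshold_db / 20)
    for i, s in enumerate(samples):
        if abs(s) > threshold:
            # First audible sample: everything before it was silence.
            return i / sample_rate
    # The whole track is silent.
    return len(samples) / sample_rate


# Invented example: 0.21 s of digital zeros, then a faint 200 Hz tone
# standing in for wind noise, at a toy sample rate of 1,000 Hz.
rate = 1000
audio = [0.0] * 210 + [0.01 * math.cos(2 * math.pi * 200 * t / rate) for t in range(790)]
print(leading_silence_seconds(audio, rate))  # → 0.21
```

Checking individual samples is crude, because a real waveform passes through zero constantly. A more careful check would measure loudness over short windows. The point is only that near-total silence at the start is easy to measure once the audio is decoded.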

Nor is this the first time fabricators have played on real issues. I remember, for instance, the wide circulation of clips showing cows drowning during the 2025 Punjab floods. Instead of authentic chaos, the videos presented a sterile calm: unnaturally still, perfectly framed, and entirely lacking the expected camera tremor or human sounds.

Unfortunately, most viewers appeared to stop at the initial shock of the disaster; few seemed to question who had engineered the synthetic content.

The ‘AI Slop’

A recent BBC investigation revealed that Pakistan has become a global hub for “AI Slop”. Creators appear to be mass-producing fake content, using real-world settings to spread lies, just to game algorithms and earn money. The incentive is simple: virality pays, truth does not.

Until early 2024, AI videos were mostly a glitchy novelty shared by enthusiasts, but the landscape arguably shifted with OpenAI’s Sora, which set off competition from heavy hitters such as X’s Grok and Google’s Veo.

To its credit, Google has recently stepped in with a partial remedy: its Gemini chatbot can now flag images created with Google’s own AI tools by detecting SynthID, a technology that embeds invisible watermarks in AI-generated content such as images, audio and text to mark it as machine-made.

The tools I used to expose these lies are free. Anyone can use them. Unfortunately, the tools to make these lies are just as accessible. That is the frightening symmetry: anyone with an ulterior motive, financial or otherwise, can fabricate a reality, but not everyone has the incentive to break through such fabrications.

AI isn’t a futuristic threat anymore. It is a street-level weapon. The next viral clip you see might not be real at all, yet the truth still sits there beneath the distortions. You only have to slow down long enough to find it. Sometimes it is a flicker on a cheek. Sometimes it is a melting hand. Sometimes it is a silence in the waveform.

The ghosts can be unmasked, but only if we choose to look closely.

from Dawn - Home https://ift.tt/o54Jzc6
